
Allen, a data scientist, and Massachi, a software engineer, worked for nearly four years at Facebook on some of the uglier aspects of social media, combating scams and election meddling. They didn’t know each other, but both quit in 2019, frustrated by what they saw as a lack of support from executives. “The work that teams like the one I was on, civic integrity, was being squandered,” Massachi said in a recent conference talk. “Worse than a crime, it was a mistake.”

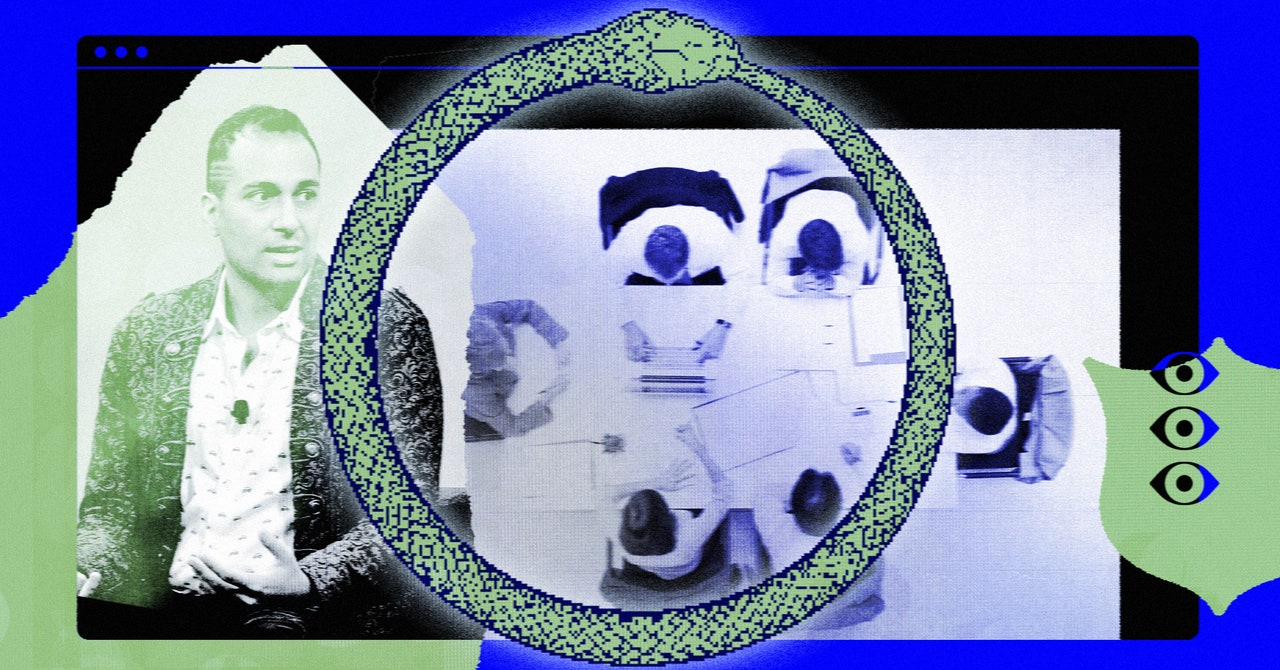

Massachi first conceived the idea of using the kind of expertise he’d developed at Facebook to draw greater public attention to the dangers of social platforms. He launched the nonprofit Integrity Institute with Allen in late 2021, after a former colleague connected them. The timing was perfect: Frances Haugen, another former Facebook employee, had just leaked a trove of company documents, catalyzing new government hearings in the US and elsewhere about problems with social media. The institute joined a new class of tech nonprofits, such as the Center for Humane Technology and All Tech Is Human, started by people from the industry’s trenches who wanted to become public advocates.

Massachi and Allen infused their nonprofit, initially bankrolled by Allen, with tech startup culture. Early staff with backgrounds in tech, politics, or philanthropy accepted modest pay for the greater good as they quickly produced a series of detailed how-to guides for tech companies on topics such as preventing election interference. Major tech philanthropy donors, including the Knight, Packard, MacArthur, and Hewlett foundations as well as the Omidyar Network, collectively committed a few million dollars in funding. Through a university-led consortium, the institute was paid to provide tech policy advice to the European Union. The organization also went on to collaborate with news outlets, including WIRED, to investigate problems on tech platforms.

To expand its capacity beyond its small staff, the institute assembled an external network of two dozen founding experts it could tap for advice or research help. Over the following years, this network of so-called institute “members” grew rapidly to include 450 people from around the world. It became a hub for tech workers cut loose in tech platforms’ sweeping layoffs, which significantly reduced the number of trust and safety, or integrity, roles responsible for content moderation and policy at companies such as Meta and X. Those who joined the network, which is free but requires passing a screening, gained access to part of the institute’s Slack community, where they could talk shop and share job opportunities.

Major tensions began to build inside the institute in March last year, when Massachi unveiled an internal document on Slack titled “How We Work” that barred the use of terms including “solidarity,” “radical,” and “free market,” which he said come off as partisan and edgy. He also encouraged avoiding the term BIPOC, an acronym for “Black, Indigenous, and people of color,” which he described as coming from the “activist space.” His manifesto seemed to echo the workplace principles that cryptocurrency exchange Coinbase had published in 2020, which barred discussion of politics and social issues not core to the company and drew condemnation from some other tech workers and executives.

“We are an internationally-focused open-source project. We are not a US-based liberal nonprofit. Act accordingly,” Massachi wrote, calling for staff to take “excellent actions” and use “old-fashioned words.” At least a couple of staffers took offense, viewing the rules as backward and unnecessary. An institution devoted to taming the thorny challenge of moderating speech now had to grapple with those same issues at home.