Bilingual AI Brain Implant Helps Stroke Survivor Converse in Both Spanish and English

A first-of-a-kind AI system enables a person with paralysis to communicate in two languages

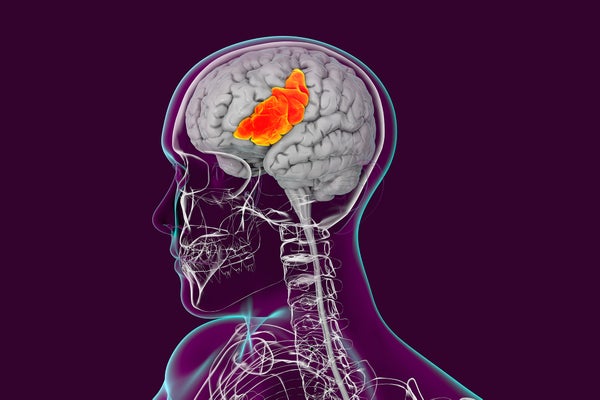

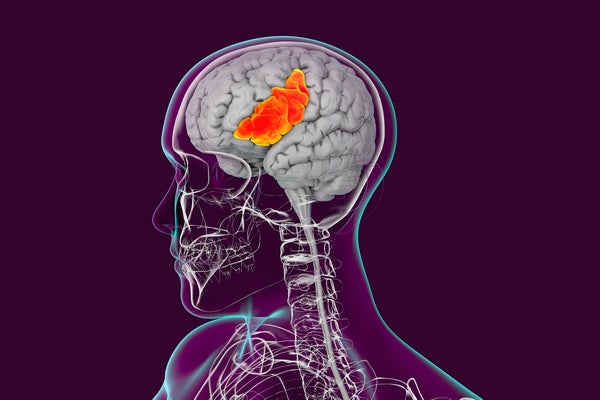

Human brain with highlighted inferior frontal gyrus, part of the prefrontal cortex and the location of Broca’s area, which is involved in language processing and speech production.

Kateryna Kon/Science Photo Library/Getty Images

For the first time, a brain implant has helped a bilingual person who is unable to articulate words to communicate in both of his languages. An artificial-intelligence (AI) system coupled to the brain implant decodes, in real time, what the individual is trying to say in either Spanish or English.

The findings, published on 20 May in Nature Biomedical Engineering, provide insights into how our brains process language, and could one day lead to long-lasting devices capable of restoring multilingual speech to people who can’t communicate verbally.

“This new study is an important contribution for the emerging field of speech-restoration neuroprostheses,” says Sergey Stavisky, a neuroscientist at the University of California, Davis, who was not involved in the study. Even though the study included only one participant and more work remains to be done, “there’s every reason to think that this strategy will work with higher accuracy in the future when combined with other recent advances”, Stavisky says.

Speech-restoring implant

The person at the heart of the study, who goes by the nickname Pancho, had a stroke at age 20 that paralysed much of his body. As a result, he can moan and grunt but cannot speak clearly. In his thirties, Pancho partnered with Edward Chang, a neurosurgeon at the University of California, San Francisco, to investigate the stroke’s lasting effects on his brain. In a groundbreaking study published in 2021, Chang’s team surgically implanted electrodes on Pancho’s cortex to record neural activity, which was translated into words on a screen.

Pancho’s first sentence — ‘My family is outside’ — was interpreted in English. But Pancho is a native Spanish speaker who learnt English only after his stroke. It’s Spanish that still evokes in him feelings of familiarity and belonging. “What languages someone speaks are actually very linked to their identity,” Chang says. “And so our long-term goal has never been just about replacing words, but about restoring connection for people.”

To achieve this goal, the team developed an AI system to decipher Pancho’s bilingual speech. This effort, led by Chang’s PhD student Alexander Silva, involved training the system as Pancho tried to say nearly 200 words. His efforts to form each word created a distinct neural pattern that was recorded by the electrodes.

The authors then applied their AI system, which has a Spanish module and an English one, to phrases as Pancho tried to say them aloud. For the first word in a phrase, the Spanish module chooses the Spanish word that matches the neural pattern best. The English component does the same, but chooses from the English vocabulary instead. For example, the English module might choose ‘she’ as the most likely first word in a phrase and assess its probability of being correct to be 70%, whereas the Spanish one might choose ‘estar’ (to be) and measure its probability of being correct at 40%.
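The first-word step described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not the authors' code: the function name `best_first_word`, the tiny vocabularies and all scores other than the 70% and 40% figures quoted above are hypothetical, and the per-word probabilities stand in for whatever the real neural-pattern classifier produces.

```python
from typing import Dict, Tuple


def best_first_word(match_probs: Dict[str, float]) -> Tuple[str, float]:
    """Return the vocabulary word with the strongest neural-pattern match."""
    word = max(match_probs, key=match_probs.get)
    return word, match_probs[word]


# Hypothetical match probabilities for one attempted word.
# Each module scores the same neural pattern against its own vocabulary.
english_probs = {"she": 0.70, "I": 0.20, "family": 0.10}
spanish_probs = {"estar": 0.40, "ella": 0.35, "familia": 0.25}

english_choice = best_first_word(english_probs)
spanish_choice = best_first_word(spanish_probs)
print("English module:", english_choice)
print("Spanish module:", spanish_choice)
```

Because each module works only within its own vocabulary, the two probabilities are independent of each other and need not add up to 100%.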

Word for word

From there, both modules attempt to build a phrase. Each chooses the second word on the basis of not only the neural-pattern match but also how likely that word is to follow the first one. So ‘I am’ would get a higher probability score than ‘I not’. The final output produces two sentences, one in English and one in Spanish, but the display screen that Pancho faces shows only the version with the higher total probability score.
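The phrase-building and selection logic can be sketched roughly as follows. The article does not say how the two kinds of evidence are combined or how the search is run, so this sketch makes several assumptions: it picks words greedily, multiplies the neural-match probability by a word-pair "follow" probability, and sums logarithms to get a total score. The function `decode_phrase` and every number in the example are hypothetical.

```python
import math
from typing import Dict, List, Tuple


def decode_phrase(
    step_probs: List[Dict[str, float]],
    follow_prob: Dict[Tuple[str, str], float],
) -> Tuple[List[str], float]:
    """Greedily build one module's phrase; return it with its log score.

    step_probs[i] maps candidate words to a (hypothetical) neural-pattern
    match probability for the i-th attempted word. follow_prob[(prev, word)]
    is a toy estimate of how likely `word` is to follow `prev`; word pairs
    that are not listed get a small default value.
    """
    words: List[str] = []
    log_score = 0.0
    for probs in step_probs:
        def combined(word: str) -> float:
            if not words:
                return probs[word]  # first word: neural match only
            return probs[word] * follow_prob.get((words[-1], word), 0.01)

        best = max(probs, key=combined)
        log_score += math.log(combined(best))
        words.append(best)
    return words, log_score


# Toy inputs: only the top candidates of each vocabulary are listed.
english_steps = [{"I": 0.6, "she": 0.4}, {"am": 0.5, "not": 0.5}]
english_follow = {("I", "am"): 0.9, ("I", "not"): 0.05}
spanish_steps = [{"yo": 0.3, "ella": 0.2}, {"estoy": 0.3, "no": 0.3}]
spanish_follow = {("yo", "estoy"): 0.8, ("yo", "no"): 0.3}

candidates = {
    "English": decode_phrase(english_steps, english_follow),
    "Spanish": decode_phrase(spanish_steps, spanish_follow),
}
# Only the sentence with the higher total score is shown on the screen.
shown = max(candidates, key=lambda lang: candidates[lang][1])
print(shown, candidates[shown][0])
```

In this toy example ‘am’ and ‘not’ match the neural pattern equally well, so the follow probability alone decides in favour of ‘I am’. Summing logarithms instead of multiplying raw probabilities is a common way to avoid numerical underflow on longer phrases.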

The modules were able to distinguish between English and Spanish on the basis of the first word with 88% accuracy and they decoded the correct sentence with an accuracy of 75%. Pancho could eventually have candid, unscripted conversations with the research team. “After the first time we did one of these sentences, there were a few minutes where we were just smiling,” Silva says.

Two languages, one brain area

The findings revealed unexpected aspects of language processing in the brain. Some previous experiments using non-invasive tools have suggested that different languages activate distinct parts of the brain. But the authors’ examination of the signals recorded directly in the cortex found that “a lot of the activity for both Spanish and English was actually from the same area”, Silva says.

Furthermore, in contrast to the results of previous studies, Pancho’s neurological responses didn’t seem to differ much from those of children who grew up bilingual, even though he was in his thirties when he learnt English. Together, these findings suggest to Silva that different languages share at least some neurological features, and that the results might be generalizable to other people.

Kenji Kansaku, a neurophysiologist at Dokkyo Medical University in Mibu, Japan, who was not involved in the study, says that in addition to adding participants, a next step will be to study languages “with very different articulatory properties” to English, such as Mandarin or Japanese. This, Silva says, is something he’s already looking into, along with ‘code switching’, or the shifting from one language to another in a single sentence. “Ideally, we’d like to give people the ability to communicate as naturally as possible.”

This article is reproduced with permission and was first published on May 21, 2024.